So here's a story that pretty much sums up where we're at with AI right now. A few weeks ago, I got completely obsessed with the idea of building my own computer user agent. You know, one of those AI systems that can actually control your computer like a human would—clicking buttons, filling out forms, navigating websites, the whole deal.

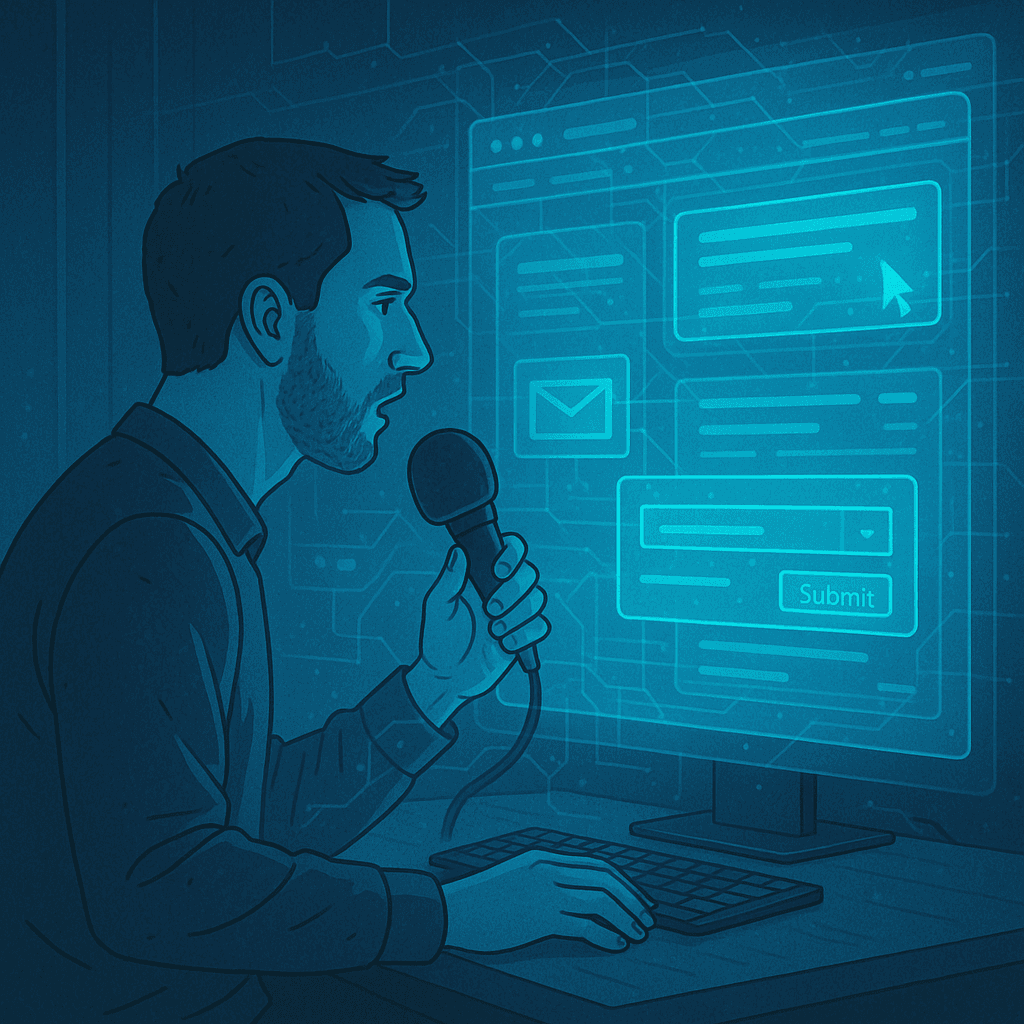

I spent a weekend hacking together a voice-controlled setup: Deepgram's live transcription API captured my speech in real time, and I passed those commands straight to OpenAI's Computer Use API to guide the agent. The idea was simple: I'd just talk to my computer, tell it what I wanted it to do, and watch it happen. Sign in to my email, book a restaurant reservation, maybe even help me manage some of my business tasks.

And honestly? When it worked, it felt like living in the future. There's something genuinely magical about saying "go to Gmail and check my unread messages" and watching your cursor move around the screen, clicking and scrolling just like you would. It was like having JARVIS from Iron Man, except... well, except it crashed constantly, moved at the speed of molasses, and would randomly decide to click on completely wrong things.

But here's the thing—even with all those frustrations, I couldn't stop thinking about the potential. Because what I built in a weekend, as janky as it was, gave me a glimpse into what I think will be one of the most transformative technologies of the next decade. We're not quite there yet, but we're close enough that I can see the finish line.

What Are Computer User Agents? (The Cool Part)

Let me back up and explain what we're actually talking about here. Computer user agents (or CUAs, as some people call them) are AI systems that can interact with computers the same way humans do. Instead of needing specialized APIs or custom integrations for every single application, these agents literally "see" your screen through screenshots, understand what's on it, and can click, type, and navigate just like you would.

OpenAI's version, which they call "Operator," represents their boldest move yet into this space. Rather than just being a chatbot that answers questions, Operator can actually go out and do things for you on the web. Want to order groceries? It can navigate to your grocery delivery app, add items to your cart, and complete the checkout. Need to book travel? It can compare flights across multiple sites and handle the entire booking process.

The broader landscape is heating up fast too. Anthropic has their "Computer Use" capability, Google just announced Project Mariner, and you can bet every major tech company is working on something similar. This isn't just about automation—it's about completely changing how we interact with technology. Instead of learning how to use dozens of different apps and websites, you just tell an AI what you want, and it figures out how to make it happen.

My Experience: The Promise and the Reality

Back to my weekend project. The setup was actually pretty straightforward technically. Deepgram's live transcription is incredibly good—it was picking up my voice commands with impressive accuracy, even when I was talking fast or being casual about it. The real magic happened when those transcribed commands hit OpenAI's Computer Use API.
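The shape of that pipeline can be sketched in a few lines of Python. This is an illustration, not the code from the weekend project, and it calls neither Deepgram nor OpenAI: `fake_transcripts`, `scripted_planner`, and the `execute` callback are hypothetical stand-ins for the live transcription stream, the model call, and the mouse-and-keyboard driver. A real loop would also send a fresh screenshot to the model on every step, which this sketch leaves out.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Action:
    kind: str          # e.g. "click", "type", or "done"
    detail: str = ""   # free-form payload, enough for a sketch


def run_agent(
    commands: Iterable[str],
    plan_next_action: Callable[[str, List[Action]], Action],
    execute: Callable[[Action], None],
    max_steps: int = 20,
) -> List[List[Action]]:
    """For each spoken command, ask the planner for actions until it says "done"."""
    runs = []
    for command in commands:
        history: List[Action] = []
        for _ in range(max_steps):  # hard cap so a confused agent cannot loop forever
            action = plan_next_action(command, history)
            if action.kind == "done":
                break
            execute(action)
            history.append(action)
        runs.append(history)
    return runs


# Hypothetical stand-in for the model: replays a fixed two-step script.
def scripted_planner(command: str, history: List[Action]) -> Action:
    script = [Action("click", "address bar"), Action("type", command)]
    return script[len(history)] if len(history) < len(script) else Action("done")


executed: List[Action] = []                      # stand-in for real mouse/keyboard control
fake_transcripts = ["check my unread messages"]  # stand-in for the transcription stream
runs = run_agent(fake_transcripts, scripted_planner, executed.append)
```

The `max_steps` cap is the one design choice worth copying: without it, an agent that keeps clicking the wrong thing has no reason to ever stop.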

When everything aligned perfectly, it was genuinely impressive. I'd say something like "check my Twitter notifications," and I'd watch as the agent opened a new tab, navigated to Twitter, logged in (I had saved credentials), and started scrolling through my notifications. The visual understanding was surprisingly sophisticated—it could distinguish between different UI elements, understand context about what was clickable, and even handle some basic troubleshooting when pages loaded slowly.

But man, the reliability issues were real. I'd estimate it successfully completed tasks maybe 60-70% of the time, which sounds decent until you realize that means roughly one task in three just... failed. Sometimes it would get confused by pop-ups or cookie banners. Other times it would click on the wrong element. I watched it try to type my password into a search box instead of the password field more times than I care to count.
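One way to see why multi-step tasks fail so often is to assume, purely for illustration, that every click or keystroke succeeds independently with the same probability. That independence assumption and the step counts below are mine for the sake of the arithmetic, not something I measured; only the 60-70% range comes from my rough estimate.

```python
def task_success_rate(per_step: float, steps: int) -> float:
    """Chance that every one of `steps` independent steps succeeds."""
    return per_step ** steps


def required_per_step(task_rate: float, steps: int) -> float:
    """Per-step success rate needed to reach `task_rate` over `steps` steps."""
    return task_rate ** (1.0 / steps)


# 0.65 is the midpoint of the 60-70% estimate; the step counts are illustrative.
for steps in (5, 10, 20):
    needed = required_per_step(0.65, steps)
    print(f"{steps:>2} steps: each step must succeed {needed:.1%} of the time")
```

Under that assumption, an agent that finishes about two tasks in three is already getting individual steps right well over 90% of the time once a task runs to ten steps, and small per-step errors are enough to sink long workflows.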

The speed was probably the biggest practical limitation, though. What would take me 30 seconds to do manually, like checking and responding to a few emails, would take the agent 3-4 minutes, which is six to eight times slower. Part of that was API latency, and part was the careful, methodical way it approached each task. Honestly, there were times I could have done the task myself, made a sandwich, and still finished before the agent was done.

But here's what kept me excited despite all the frustrations: when it did work, it worked on websites and applications I had never specifically set it up for. That's the real breakthrough here. This wasn't some rigid automation script that breaks the moment a website changes its layout. This was a general-purpose model applied to computer interfaces.

The Technical Challenges (Why It's Hard)

The more I played with my voice-controlled agent, the more I appreciated just how complex this problem really is. Think about what happens when you use a computer. You're constantly processing visual information, understanding context, making decisions based on incomplete information, and adapting to unexpected situations. Now imagine trying to teach an AI system to do all of that reliably, across thousands of different websites and applications, each with its own quirks and design patterns.

The visual understanding alone is incredibly complex. Modern websites are full of dynamic content, pop-ups, loading states, and responsive designs that change based on screen size. What looks like a button to a human might be implemented as a div with click handlers, or could be partially obscured by other elements. The agent has to understand not just what's visible, but what's actually interactive and how to interact with it effectively.
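To make the "button that is really a div" problem concrete, here is a small heuristic written with Python's standard `html.parser` module. It is a sketch of what can be learned from markup alone, not how any real agent decides what to click; the sample page and the `ClickableFinder` class are made up for the example.

```python
from html.parser import HTMLParser

# Tags that browsers treat as interactive without any extra wiring (a rough list).
NATIVE_INTERACTIVE = {"a", "button", "input", "select", "textarea"}


class ClickableFinder(HTMLParser):
    """Collect tags that look clickable judging from the markup alone."""

    def __init__(self) -> None:
        super().__init__()
        self.clickable = []  # list of (tag, reason) pairs

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in NATIVE_INTERACTIVE:
            self.clickable.append((tag, "native element"))
        elif "onclick" in attributes:
            self.clickable.append((tag, "inline onclick handler"))
        elif attributes.get("role") == "button":
            self.clickable.append((tag, "ARIA role=button"))


# Made-up page: four things a human would probably read as buttons.
page = """
<button>Sign in</button>
<div onclick="submitForm()">Continue</div>
<div role="button" tabindex="0">Accept cookies</div>
<div class="btn-primary">Book now</div>
"""

finder = ClickableFinder()
finder.feed(page)
for tag, reason in finder.clickable:
    print(tag, "-", reason)
```

The heuristic finds the first three elements and misses "Book now". If that div gets its click handler from a script at runtime, nothing in the HTML says so, which is presumably part of why screen-reading agents have to judge clickability from how things look.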

Then there's the context problem. Humans are incredibly good at maintaining context across multi-step tasks. If I'm booking a flight, I remember that I'm looking for specific dates, prefer window seats, and have airline loyalty preferences. I can adapt if the first website doesn't have what I want and seamlessly move to another option. Teaching an AI to maintain that kind of contextual awareness across potentially lengthy workflows is genuinely difficult.
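In code terms, "maintaining context" means carrying some explicit state from step to step and showing it to the model every time. The sketch below is one hypothetical way to hold the flight-booking example in a Python dataclass; the field names, the placeholder site names, and the prompt format are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class TaskContext:
    goal: str
    constraints: Dict[str, str]
    sites_to_try: List[str]
    notes: List[str] = field(default_factory=list)  # what has happened so far

    def next_site(self) -> Optional[str]:
        """Return the next fallback site, or None when the options run out."""
        return self.sites_to_try.pop(0) if self.sites_to_try else None

    def as_prompt(self) -> str:
        """Render the context so every model call sees the same goal and preferences."""
        prefs = ", ".join(f"{k}={v}" for k, v in self.constraints.items())
        log = "; ".join(self.notes) or "nothing yet"
        return f"Goal: {self.goal}\nPreferences: {prefs}\nSo far: {log}"


ctx = TaskContext(
    goal="book a flight",
    constraints={"seat": "window", "dates": "fixed", "loyalty": "preferred airline"},
    sites_to_try=["airline-a.example", "airline-b.example"],
)
first = ctx.next_site()
ctx.notes.append(f"{first}: no window seats left")  # record why the first attempt failed
second = ctx.next_site()                            # move on without losing the preferences
print(ctx.as_prompt())
```

The hard part in practice is not the data structure but deciding what belongs in `notes`: too little and the agent repeats its mistakes, too much and the important preferences get buried.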

Where This Is All Heading

Despite the current limitations, I'm convinced we're on the verge of something massive here. The fundamental breakthroughs needed (better visual understanding, more reliable action planning, faster processing) are all actively being worked on by some of the best-funded teams in the industry. And the market demand is enormous.

Think about the economic implications alone. Every business that currently employs people to do repetitive computer tasks is a potential customer. Customer service, data entry, content moderation, testing, administrative work—there are millions of jobs that essentially involve navigating computer interfaces all day. Computer user agents won't replace all of those roles, but they'll definitely transform them.

For developers, this could be the end of the API integration nightmare. Instead of spending weeks building custom connections between different services, you could just describe what you want to happen and let an agent figure out the implementation details. Testing could become as simple as describing user scenarios in natural language.

And for regular users? We're talking about a world where technology actually adapts to you, rather than forcing you to learn dozens of different interfaces. Your computer becomes more like a capable assistant that can handle the tedious stuff while you focus on the creative and strategic work.

I think we're maybe 6-18 months away from computer user agents becoming genuinely useful for everyday tasks. The pieces are all there—they just need to get faster, more reliable, and better at handling edge cases. But when that happens, it's going to change everything about how we interact with technology.

Computer User Agents: OpenAI's Bold Step Into the Future (And Why It's Not Quite Ready Yet)

My hands-on experience building a voice-controlled computer agent using OpenAI's Computer Use API, exploring both its incredible potential and current limitations in reliability and performance.

Aslan Farboud
